Introduction

A new paradigm is reshaping business analytics. Instead of the traditional “build a dashboard, then interpret it” approach, organizations are moving toward interactive narrative analytics: a model in which users begin with natural-language questions, the system retrieves governed, trusted facts through retrieval-augmented generation (RAG), and large language models (LLMs) synthesize explanations that users can probe, refine, and challenge. The experience evolves from static charts and filters into a continuous analytical dialogue about what happened, why it happened, what may happen next, and what actions to take, all anchored in a shared semantic layer and consistent metric definitions.

In this shift, LLMs redefine the user interface, RAG redefines trust, and Model Context Protocol (MCP) servers redefine integration. RAG constrains generative models to validated enterprise sources (datasets, KPIs, subject areas, and documentation), making insights transparent and traceable. MCP provides a standardized, secure way for AI agents to invoke analytics tools and services. Together, these technologies turn analytics from something users passively consume into something AI agents can actively operate. The result is richer, more exploratory, story-driven insight: explanations, visuals, anomaly summaries, and follow-up questions generated on demand, without constantly redesigning dashboards.

How Oracle Analytics enables a new analytics mindset

Oracle Analytics Cloud (OAC) is actively aligning with this shift by introducing an OAC MCP server, which acts as a standardized bridge between MCP-compatible AI clients and OAC’s governed analytics assets. Through natural-language interactions, AI clients can securely query and explore enterprise analytics content or invoke OAC tools and APIs. Crucially, this is done while preserving enterprise-grade controls such as OAuth-based authentication and full adherence to user-level permissions, ensuring that every response remains consistent with OAC’s security and governance model.

In practical terms, this allows OAC to take part in the narrative analytics workflow beyond the boundaries of its native UI. By registering the OAC MCP server with AI clients, such as conversational assistants or developer tooling, organizations enable AI agents to retrieve governed metrics, summarize workbook insights, or answer contextual questions like “What does this KPI represent, and why did it change?” All of this stays anchored to OAC’s semantic layer, metric definitions, and access rights, effectively extending trusted analytics into conversational and agent-driven experiences.
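Under the hood, MCP messages are JSON-RPC 2.0 requests, and invoking a tool uses the `tools/call` method with the tool’s name and arguments. The sketch below shows roughly what an AI client sends when it calls a tool on the OAC MCP server. The tool name oracle_analytics_execute_logical_sql and its query argument come from the Claude walkthrough in the next section; the helper name build_tool_call and the request id are illustrative assumptions, and transport (stdio or HTTP) and the OAuth handshake are deliberately left out.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP "tools/call" request as a JSON-RPC 2.0 message.

    build_tool_call is a hypothetical helper; MCP client SDKs normally
    construct and send this envelope for you.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Tool name as it appears in the walkthrough; the query is shortened here.
payload = build_tool_call(
    1,
    "oracle_analytics_execute_logical_sql",
    {"query": "SELECT ... FROM XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')"},
)
print(payload)
```

The server executes the Logical SQL under the calling user’s permissions and returns the result to the client, which is what keeps the AI answer inside OAC’s governance model.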

Simple example

How? Let’s take a look at one simple example.

Let’s start our analysis with a simple request, “use sales data”, entered in the Claude chat box.

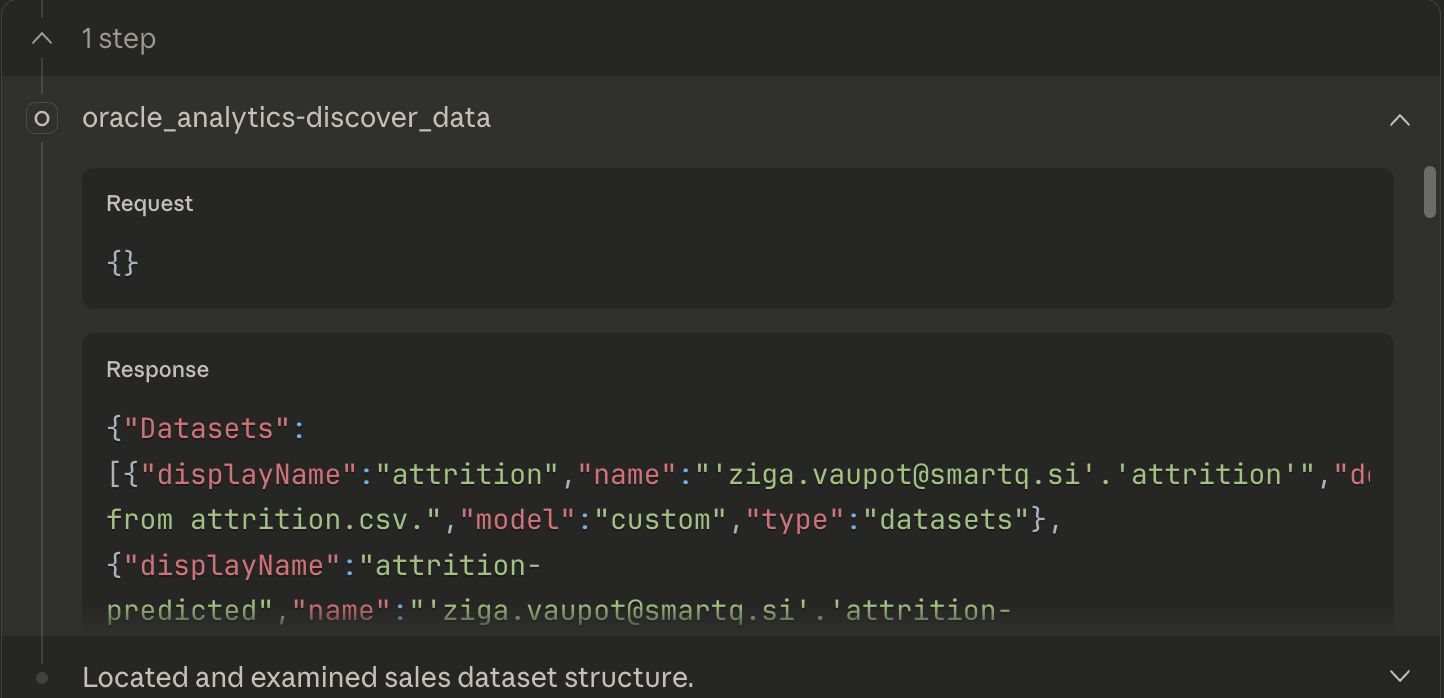

Data analysis begins with discovery and description of the data. A request to obtain available datasets in an Oracle Analytics instance is issued:

The response is a JSON payload (note: the list of datasets is much longer; here I’m showing only Sales Data):

{

"Datasets": [

{

"displayName": "Sales Data",

"name": "'ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA'",

"description": "Uploaded from SAMPLE_SALES_DATA.xlsx.",

"model": "custom",

"type": "datasets"

}

]

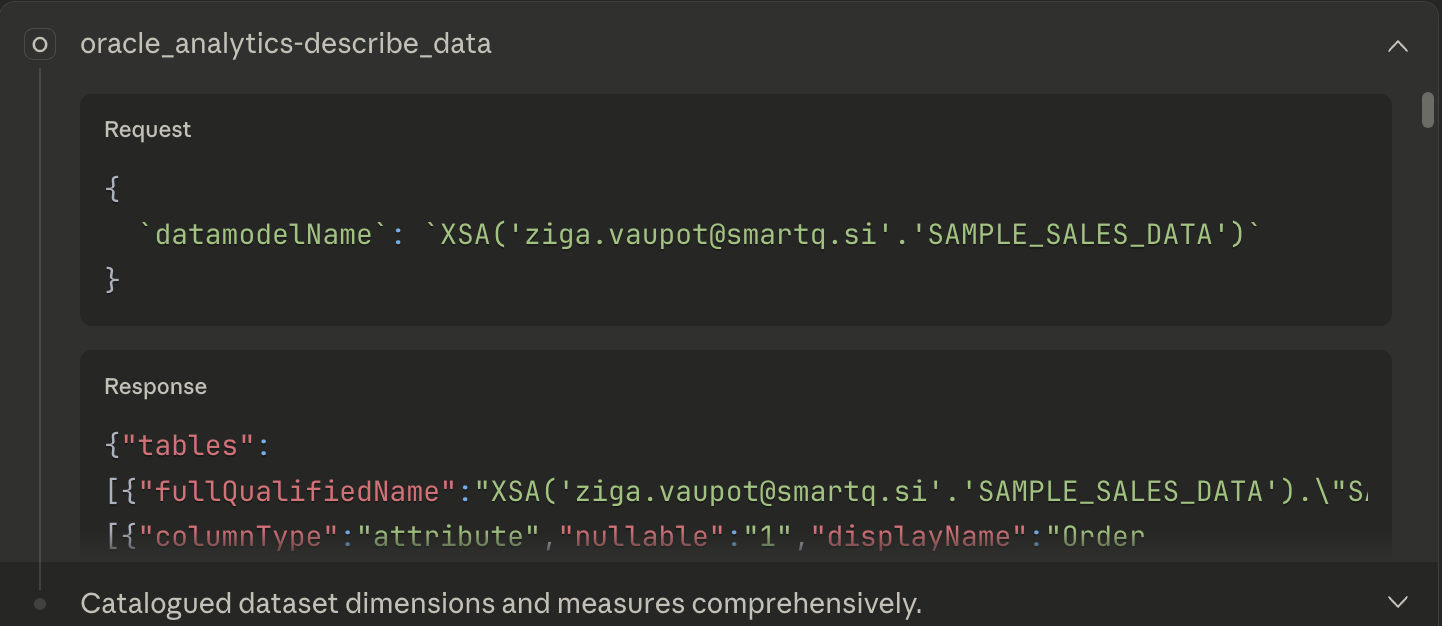

}The dataset has been identified, so the next step—describing it automatically—begins:

Response:

```json
{
  "subjectArea": "XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')",
  "tables": [
    {
      "tableName": "SAMPLE_SALES_DATA",
      "fullyQualifiedName": "XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA').\"SAMPLE_SALES_DATA\"",
      "columns": {
        "attributes": [
          { "name": "Order Key", "displayName": "Order Key", "dataType": "NUMERIC", "nullable": true },
          { "name": "Order Status", "displayName": "Order Status", "dataType": "VARCHAR", "length": 17, "nullable": true }
          /* ... trimmed for readability in the article ... */
        ],
        "measures": [
          { "name": "Units", "displayName": "Units", "dataType": "NUMERIC", "nullable": true },
          { "name": "Revenue", "displayName": "Revenue", "dataType": "NUMERIC", "nullable": true }
          /* ... */
        ]
      }
    }
  ]
}
```

Based on this response, Claude formulates its final answer, listing the available fields and suggesting possible analyses:

Available fields:

- Revenue, Units, Discount Value, Cost Fixed, Cost Variable
- Product, Prod Brand, Prod Lob (Line of Business), Prod Type
- Cust Name, Cust Segment, Cust Type, Cust Gender, Cust Marital Status, Cust Credit Rate
- City, State Prov, Region, Country Name, Area
- Time Bill Dt, Time Paid Dt
- Channel Name
- Order Key, Order Status

Suggested analyses:

- Revenue by product brand or region
- Top customers by revenue
- Sales performance by channel
- Customer segment analysis
- Time-based trends
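The two discovery steps can be chained mechanically: pick the dataset by its display name, wrap its name in XSA(...) to obtain the subject area (the pattern this example’s describe response suggests), and split each table’s columns into attributes and measures. A minimal sketch, assuming the response shapes shown above; trimmed copies of both payloads are inlined, and the function names are hypothetical.

```python
# Trimmed copies of the two discovery responses shown above.
DATASETS = {
    "Datasets": [
        {
            "displayName": "Sales Data",
            "name": "'ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA'",
            "description": "Uploaded from SAMPLE_SALES_DATA.xlsx.",
            "model": "custom",
            "type": "datasets",
        }
    ]
}

DESCRIPTION = {
    "subjectArea": "XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')",
    "tables": [
        {
            "tableName": "SAMPLE_SALES_DATA",
            "columns": {
                "attributes": [
                    {"name": "Order Key", "dataType": "NUMERIC"},
                    {"name": "Order Status", "dataType": "VARCHAR"},
                ],
                "measures": [
                    {"name": "Units", "dataType": "NUMERIC"},
                    {"name": "Revenue", "dataType": "NUMERIC"},
                ],
            },
        }
    ],
}


def subject_area_for(datasets: dict, display_name: str) -> str:
    """Return the XSA(...) subject area of the dataset with this display name."""
    for ds in datasets["Datasets"]:
        if ds["displayName"] == display_name:
            return f"XSA({ds['name']})"
    raise KeyError(display_name)


def column_names(description: dict) -> dict:
    """Map each table name to its attribute and measure column names."""
    result = {}
    for table in description["tables"]:
        cols = table["columns"]
        result[table["tableName"]] = {
            "attributes": [c["name"] for c in cols["attributes"]],
            "measures": [c["name"] for c in cols["measures"]],
        }
    return result


area = subject_area_for(DATASETS, "Sales Data")
print(area)  # XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')
print(column_names(DESCRIPTION))
```

Separating attributes from measures matters because measures are the columns that get aggregated, while attributes are the ones you group by.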

We will continue with a more general question and a request to analyze revenue:

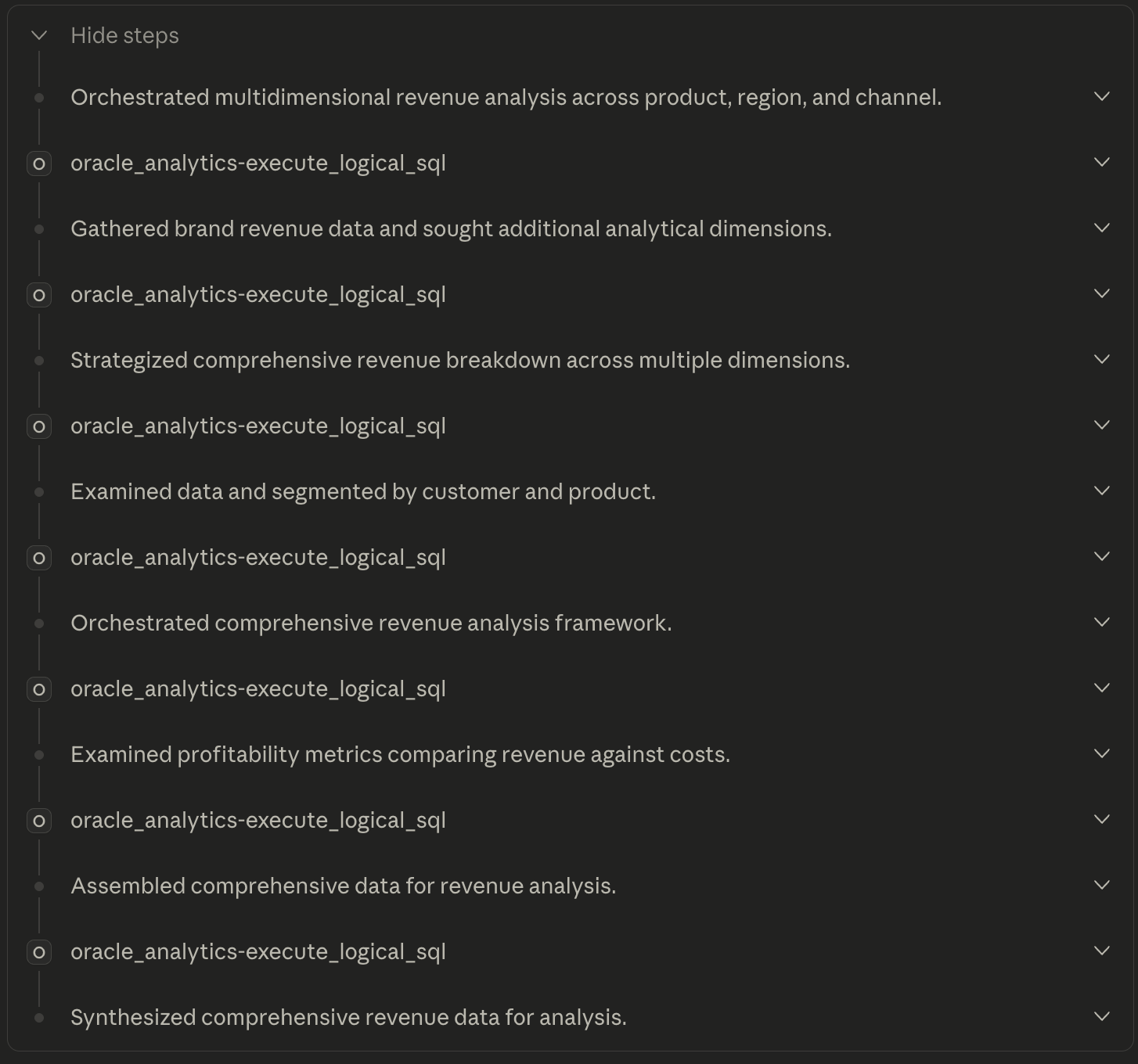

Now Claude begins a deeper analysis through the MCP server. It creates an analysis plan, similar in spirit to Auto Insights in Oracle Analytics.

Let’s examine the first couple of steps.

In the first step (“Orchestrated multidimensional revenue analysis across product, region and channel”), Claude explains its thought process.

Next, it starts generating requests and gathering responses. For example, for its first call to the oracle_analytics_execute_logical_sql tool, Claude generates the following request:

```json
{
  "query": "SELECT\n XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA').\"SAMPLE_SALES_DATA\".\"Prod Brand\" AS llm_0,\n SUM(XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA').\"SAMPLE_SALES_DATA\".\"Revenue\") AS llm_1\nFROM XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')\nGROUP BY XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA').\"SAMPLE_SALES_DATA\".\"Prod Brand\"\nORDER BY llm_1 DESC\nFETCH FIRST 20 ROWS ONLY"
}
```

Oracle Analytics responds with the following result:

```json
{
  "metadata": {
    "requestId": "5db5d3d4-7a98-4c89-a5a1-c9da0ff44f57",
    "batchSize": 1000,
    "startTime": 1766162116326
  },
  "batches": [
    {
      "complete": true,
      "cursorId": "1ffcb9df-acc0-4020-9f18-51f3aa6eabf1",
      "batchNumber": 1,
      "data": [
        { "llm_0": "FunPod", "llm_1": 2047226.99 },
        { "llm_0": "BizTech", "llm_1": 2032292.78 },
        { "llm_0": "HomeView", "llm_1": 1013841.4 }
      ],
      "hasMore": false,
      "totalRecordsStreamed": 3,
      "isComplete": true,
      "recordsInBatch": 3,
      "timestamp": 1766162116329
    }
  ],
  "status": {
    "result": "success",
    "error": false,
    "message": "Streaming completed successfully"
  },
  "summary": {
    "completed": true,
    "totalBatches": 1,
    "totalRecords": 3,
    "endTime": 1766162116331,
    "durationMs": 5
  }
}
```

In the third step, the thought process continues: “Good, I have revenue by brand. Let me get more dimensions for a comprehensive analysis.”
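Getting more dimensions amounts to rerunning the same query shape with a different attribute. The first request above follows a simple pattern: one attribute, one aggregated measure, ordered descending, limited to the top N rows. The hypothetical helper below rebuilds that Logical SQL string; for Prod Brand and Revenue it reproduces the first request exactly, and attributes such as Region or Channel Name would yield queries of the same shape. It is a sketch only: column names are inserted verbatim, with no quoting or escaping, so it assumes trusted names taken from the describe response.

```python
def top_n_by_attribute(
    subject_area: str,
    table: str,
    attribute: str,
    measure: str,
    n: int = 20,
    aggregate: str = "SUM",
) -> str:
    """Build a 'top N attribute values by aggregated measure' Logical SQL query.

    Hypothetical helper; names are inserted verbatim (no escaping).
    """

    def column(name: str) -> str:
        # Fully qualified column: subject area, then quoted table and column.
        return f'{subject_area}."{table}"."{name}"'

    return (
        "SELECT\n"
        f" {column(attribute)} AS llm_0,\n"
        f" {aggregate}({column(measure)}) AS llm_1\n"
        f"FROM {subject_area}\n"
        f"GROUP BY {column(attribute)}\n"
        "ORDER BY llm_1 DESC\n"
        f"FETCH FIRST {n} ROWS ONLY"
    )


AREA = "XSA('ziga.vaupot@smartq.si'.'SAMPLE_SALES_DATA')"
print(top_n_by_attribute(AREA, "SAMPLE_SALES_DATA", "Prod Brand", "Revenue"))
```

Keeping the aliases fixed (llm_0 for the attribute, llm_1 for the measure) means every response can be read the same way, regardless of which dimension was queried.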

And so on, until the final step. The result of this simple “analyze data” request is a narrative report (and, optionally, a generated dashboard).

| Metric | Value |
|---|---|
| Total Revenue | $5,093,361 |
| Total Units Sold | 54,941 |
| Total Orders | 5,248 |
| Avg Revenue / Order | $970 |
| Total Costs | $5,101,604 (Fixed: $1.37M + Variable: $3.73M) |
| Total Discounts | $127,519 |

| Brand | Revenue | Share |
|---|---|---|
| FunPod | $2,047,227 | 40.2% |
| BizTech | $2,032,293 | 39.9% |
| HomeView | $1,013,841 | 19.9% |

| Region | Revenue | Share |
|---|---|---|
| EMEA | $2,031,918 | 39.9% |
| Americas | $2,020,962 | 39.7% |
| APAC | $1,040,480 | 20.4% |

| Channel | Revenue | Units | Avg / Unit |
|---|---|---|---|
| Store | $2,867,342 | 31,408 | $91 |
| Online | $1,025,136 | 10,945 | $94 |
| Reseller | $728,994 | 7,517 | $97 |
| Catalog | $471,890 | 5,071 | $93 |

- Profitability concern — Costs nearly equal revenue, indicating thin margins

- Brand balance — FunPod and BizTech perform equally well

- Channel opportunity — Online has room to grow vs Store

- Geographic gap — APAC remains underrepresented

- High-value segment — Active Singles drive the most value
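The headline numbers in the report can be cross-checked with simple arithmetic. The sketch below flattens the rows of the batched query response shown earlier (only the fields it needs are inlined), recomputes the brand and region shares, and derives average revenue per unit by channel, using values copied from the tables above. The function names are hypothetical. The last line also shows that total costs come out slightly above total revenue.

```python
# Trimmed copy of the batched response returned for the brand query.
RESPONSE = {
    "batches": [
        {
            "batchNumber": 1,
            "data": [
                {"llm_0": "FunPod", "llm_1": 2047226.99},
                {"llm_0": "BizTech", "llm_1": 2032292.78},
                {"llm_0": "HomeView", "llm_1": 1013841.4},
            ],
        }
    ]
}


def rows(response: dict) -> list:
    """Concatenate the data rows of all batches, in order."""
    return [row for batch in response["batches"] for row in batch["data"]]


def shares(pairs: list) -> dict:
    """Percentage share of each label in the total, rounded to one decimal."""
    total = sum(value for _, value in pairs)
    return {label: round(100 * value / total, 1) for label, value in pairs}


brand_share = shares([(r["llm_0"], r["llm_1"]) for r in rows(RESPONSE)])
print(brand_share)  # {'FunPod': 40.2, 'BizTech': 39.9, 'HomeView': 19.9}

# Region revenue, copied from the region table.
region_share = shares([("EMEA", 2031918), ("Americas", 2020962), ("APAC", 1040480)])
print(region_share)  # {'EMEA': 39.9, 'Americas': 39.7, 'APAC': 20.4}

# Channel revenue and units, copied from the channel table.
channels = {
    "Store": (2867342, 31408),
    "Online": (1025136, 10945),
    "Reseller": (728994, 7517),
    "Catalog": (471890, 5071),
}
avg_per_unit = {name: round(rev / units) for name, (rev, units) in channels.items()}
print(avg_per_unit)  # {'Store': 91, 'Online': 94, 'Reseller': 97, 'Catalog': 93}
print(sum(units for _, units in channels.values()))  # 54941

# Headline KPIs, copied from the summary table.
total_revenue, total_costs, total_orders = 5093361, 5101604, 5248
print(round(total_revenue / total_orders, 2))  # 970.53
print(total_revenue - total_costs)  # -8243
```

Checks like these are cheap and worth running on any AI-generated report before sharing it, since they confirm that the narrative is consistent with the numbers actually returned by Oracle Analytics.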

This is very different from what we’ve been used to. You can also ask Claude to “Visualize these reports”, which generates an HTML report such as sales_dashboard.html, the Sales Revenue Dashboard.

You can then follow up on Claude’s suggestion: “Would you like me to drill deeper into any of these areas: profitability by product, regional trends, or customer behavior?”

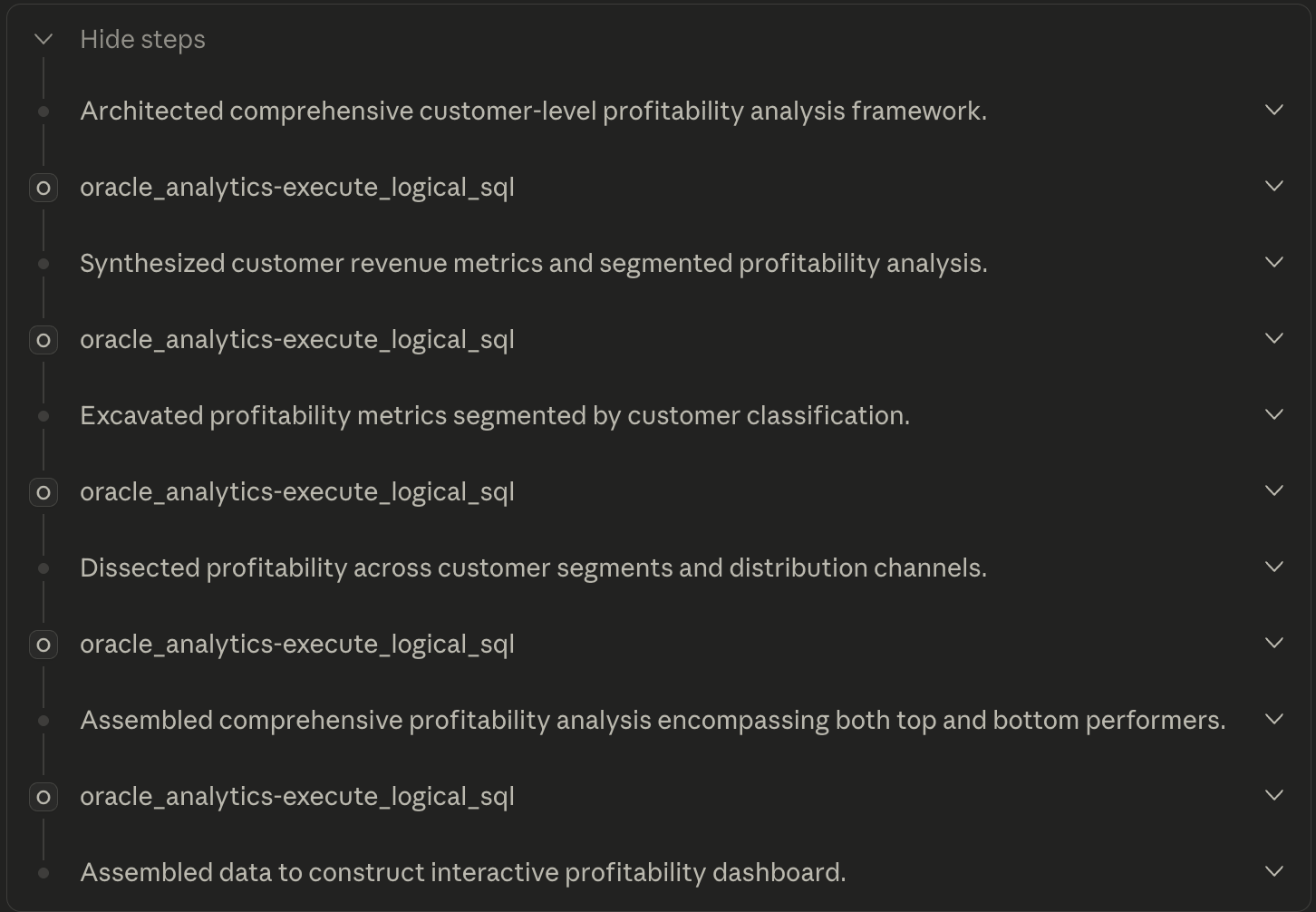

Based on this, Claude prepares and executes a new analysis plan.

The outcome is a detailed Customer Profitability Analysis dashboard.

Summary

How cool is that? The tool generates reports and provides explanations and reasoning. No coding required. Just data.

The key to success in enterprise analytics is not just access to data, but well-governed, well-organized data in the warehouse, combined with a comprehensive metadata and semantic model shared across the organization. This foundation keeps analyses consistent, explainable, and auditable, and it reduces the risk of hallucinations, metric drift, and other anomalies that can undermine trust in AI-driven insights.

The Oracle Analytics Semantic Model is the key ingredient that enables governed, trustworthy analytics at enterprise scale.