In my previous blog posts, we explored this fascinating new technology—how to set it up, the broader analytics paradigm it introduces, and a deep dive into some specific Oracle Analytics functions.

But what about the basics? What about standard business reporting—the business-as-usual activities we deal with every day? For example, the classic financial reports that managers rely on daily to run the business. These are the standard reports that are typically pre-prepared and embedded within dashboards.

Well, let's take a look! I have tested two examples of those standard financial reports:

- Revenue analysis report that compares current year actuals vs. previous year, vs. planned data and calculates year-to-date values.

- Performance report that is providing information about revenue, profit and profit margin for the selected period, ie. half-year.

The process is already known by now. Startup Claude client and start asking questions. When working with OAC MCP Server, OAC login screen pops up if you are starting with your work - later token takes care of authentication.

Performance report

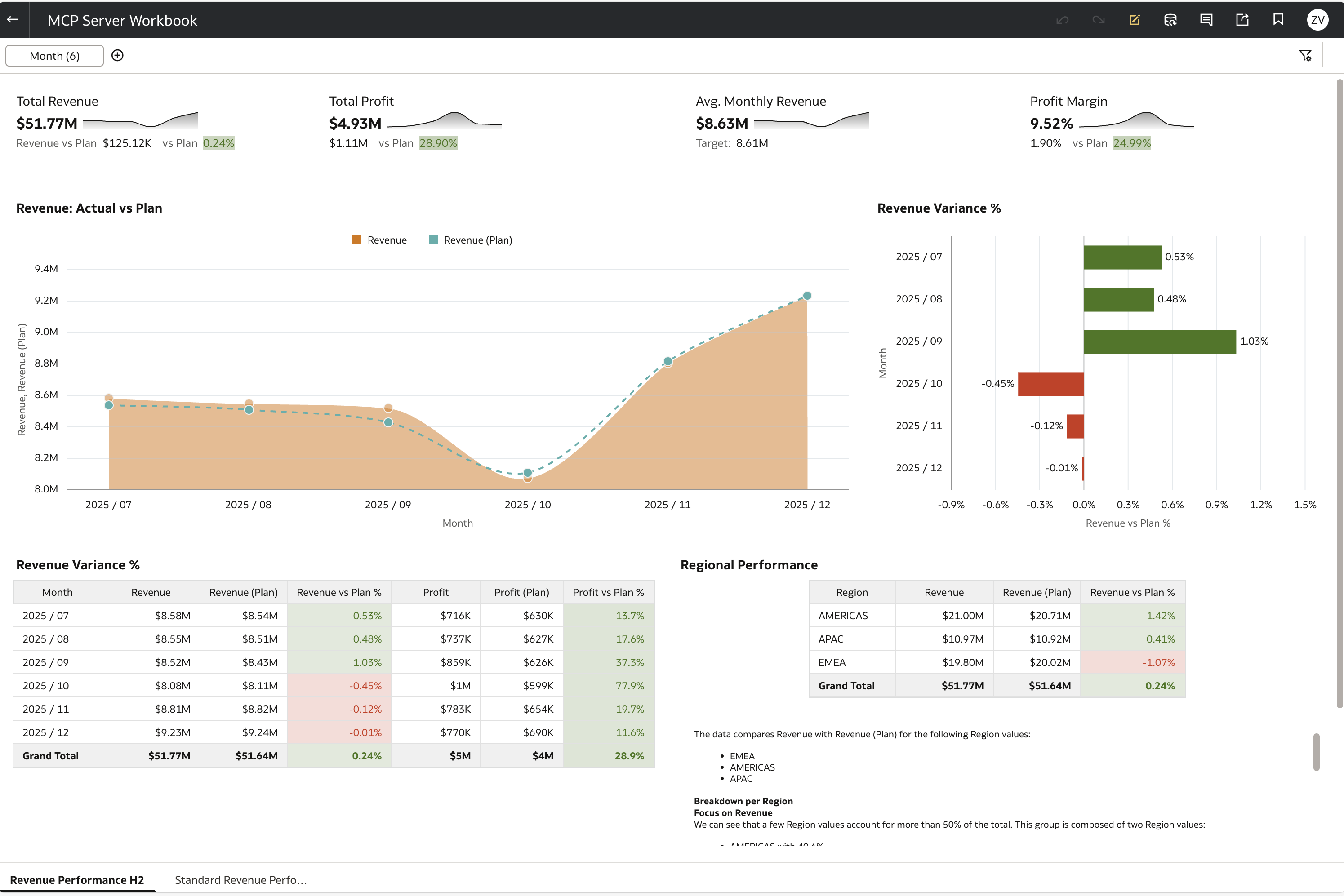

We will start with the performance report, which provides information about revenues and profits for the selected period, in our case for the last six months.

In Oracle Analytics, this report would look like this:

Note: This report was actually developed based on the final result from html page generated by Claude. Another note: It took me approx. 1 hour to create it from scratch.

Generating something similar as above when using Claude as a client, it would take a few minutes (mostly because it generate HTML code which is quite time consuming).

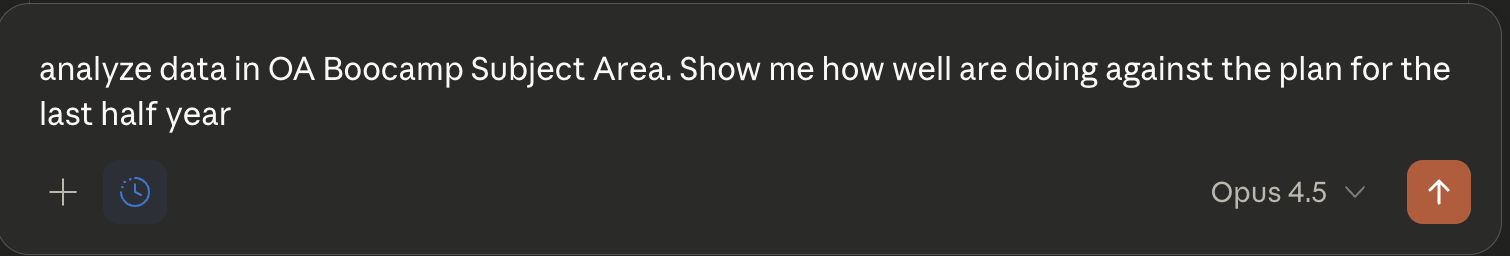

Nevertheless, let's take a look. We'll begin with issuing the following request:

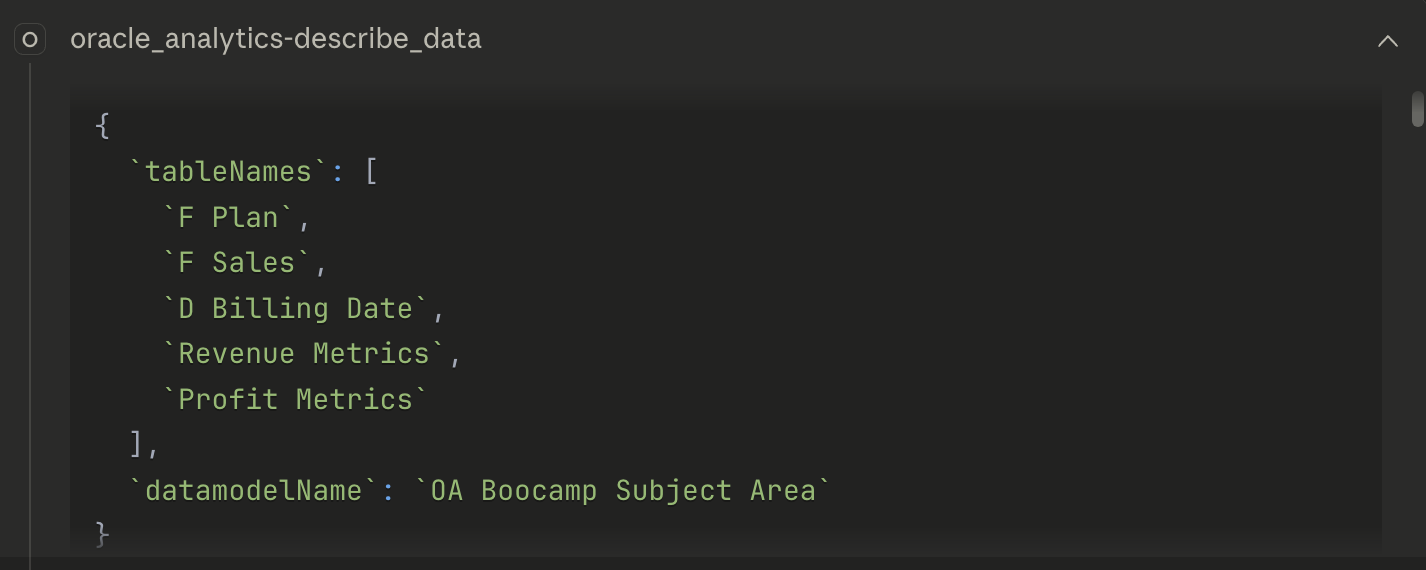

Claude starts thinking and in the 1st step, it calls oracle_analytics-describe_data tool tool to look for a subject area OA Bootcamp Subject Area, which I specified in my request. Subject area has been identified and here is the response:

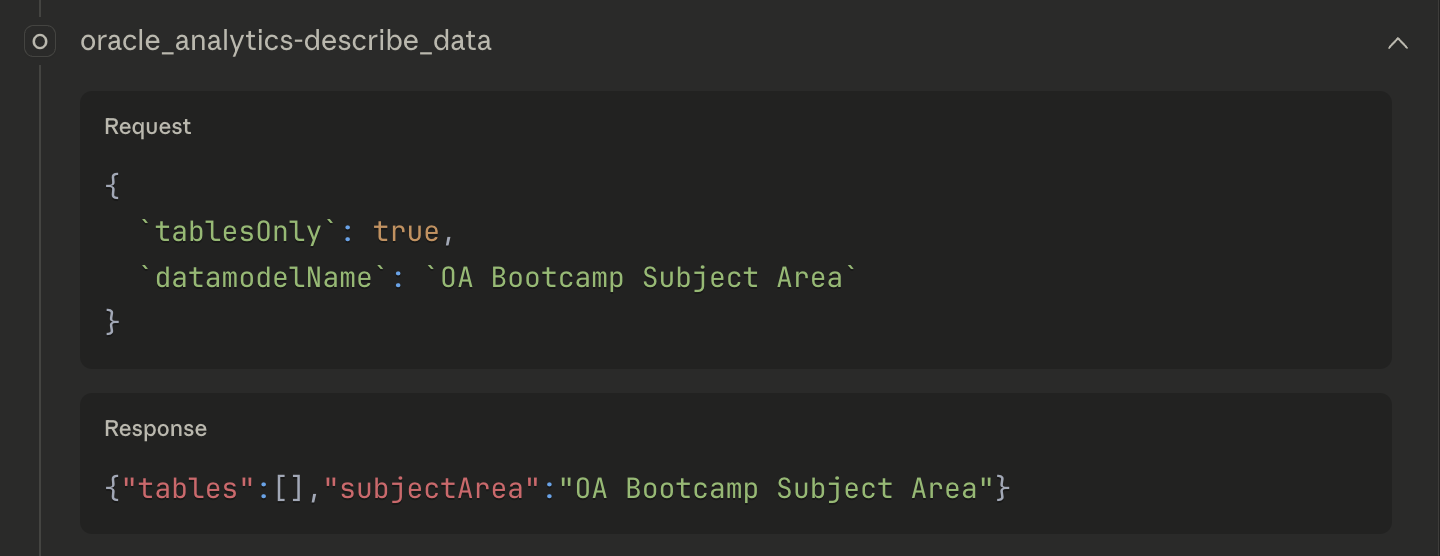

Subsequently, oracle_analytics-describe_data tool has been called again to retrieve details for the subject area:

And here is a complete response in JSON:

{

"tables": [

{

"tableName": "Counts",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"Counts\"",

"description": ""

},

{

"tableName": "D Billing Date",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Billing Date\"",

"description": ""

},

{

"tableName": "D Customers",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Customers\"",

"description": ""

},

{

"tableName": "D Geography",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Geography\"",

"description": ""

},

{

"tableName": "D Orders",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Orders\"",

"description": ""

},

{

"tableName": "D Payment Date",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Payment Date\"",

"description": ""

},

{

"tableName": "D Products",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"D Products\"",

"description": ""

},

{

"tableName": "F Plan",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"F Plan\"",

"description": ""

},

{

"tableName": "F Sales",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"F Sales\"",

"description": ""

},

{

"tableName": "F_REVENUE_ALL_YEARS",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"F_REVENUE_ALL_YEARS\"",

"description": ""

},

{

"tableName": "Profit Metrics",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"Profit Metrics\"",

"description": ""

},

{

"tableName": "Revenue Metrics",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"Revenue Metrics\"",

"description": ""

}

],

"subjectArea": "OA Boocamp Subject Area"

}

Claude has now been able to see that there are two fact tables F Plan and F Sales along side time dimension D Billing Date, which are likely candidates to hold required information. As a next step, relevant columns, for plan vs revenue analysis have been searched for. Hence a new call to oracle_analytics-describe_data tool has been made, this time:

Response is now much longer as all columns have been retrieved for the 5 tables (as JSON is now quite long, only F Plan table is shown below):

{

"tables": [

{

"tableName": "F Plan",

"fullQualifiedName": "\"OA Boocamp Subject Area\".\"F Plan\"",

"columns": [

{

"columnType": "measure",

"nullable": "1",

"displayName": "Profit (Plan)",

"dataType": "NUMERIC",

"precision": "28",

"name": "Profit (Plan)",

"length": "28",

"description": "",

"scale": "0",

"aggregation": null,

"fullyQualifiedName": "\"OA Boocamp Subject Area\".\"F Plan\".\"Profit (Plan)\""

},

{

"columnType": "measure",

"nullable": "1",

"displayName": "Profit Margin (Plan)",

"dataType": "NUMERIC",

"precision": "28",

"name": "Profit Margin (Plan)",

"length": "28",

"description": "",

"scale": "0",

"aggregation": null,

"fullyQualifiedName": "\"OA Boocamp Subject Area\".\"F Plan\".\"Profit Margin (Plan)\""

},

{

"columnType": "measure",

"nullable": "1",

"displayName": "Revenue (Plan)",

"dataType": "NUMERIC",

"precision": "28",

"name": "Revenue (Plan)",

"length": "28",

"description": "",

"scale": "0",

"aggregation": null,

"fullyQualifiedName": "\"OA Boocamp Subject Area\".\"F Plan\".\"Revenue (Plan)\""

}

]

}

],

"subjectArea": "OA Boocamp Subject Area"

}

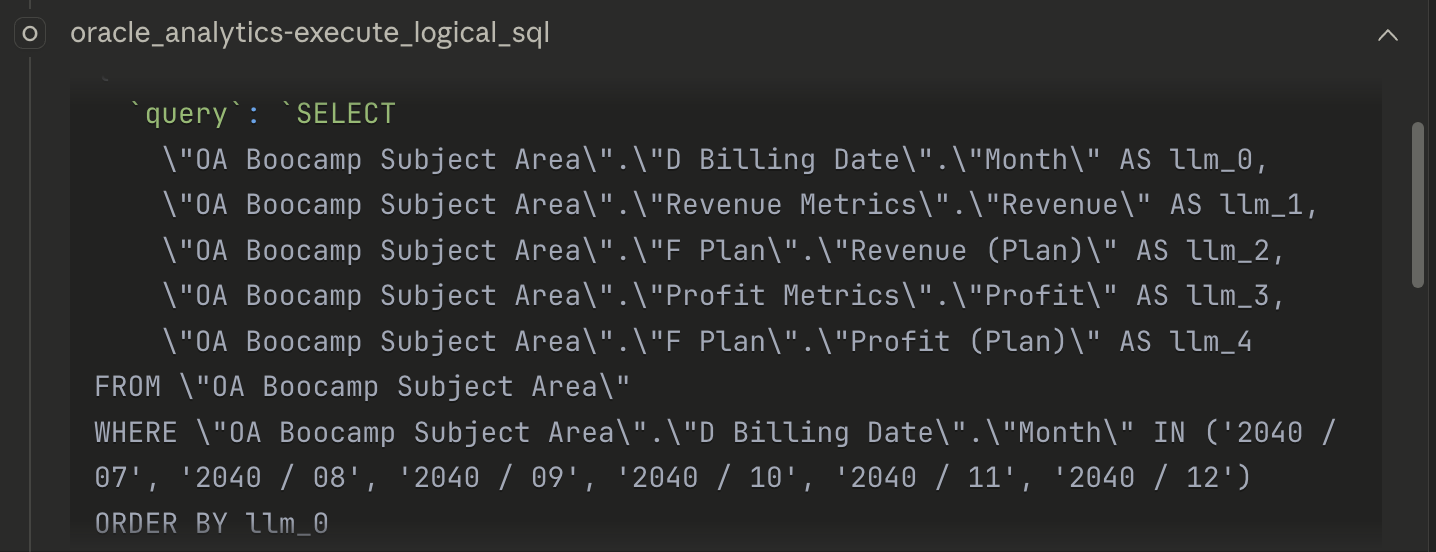

With this, Claude has concluded that it finally understands the data model and that it can now continue with periods analysis. This time it uses second tool from MCP's arsenal, oracle_analytics-exdcute_logical_sql, and the SQL to run is:

{

`query`: `SELECT DISTINCT \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" AS llm_0, \"OA Boocamp Subject Area\".\"D Billing Date\".\"Month\" AS llm_1 FROM \"OA Boocamp Subject Area\" ORDER BY llm_0 DESC, llm_1 DESC FETCH FIRST 20 ROWS ONLY`,

`maxRows`: 20

}

We can see an interesting result as it only queried D Billing Date table witch doesn't give any context related to our request to analyze last 6 months. Result retrieved because of that is:

Good, I can see the data goes up to 2040. Let me now get the last 6 months of data and compare actual vs plan for Revenue and Profit. I'll analyze the most recent 6 months (July-December 2040).

This is obviously wrong, and Claude will realize this within next few steps as the next query through oracle_analytics-exdcute_logical_sql tool wants to retrieve data from that period. Obviously without any success.

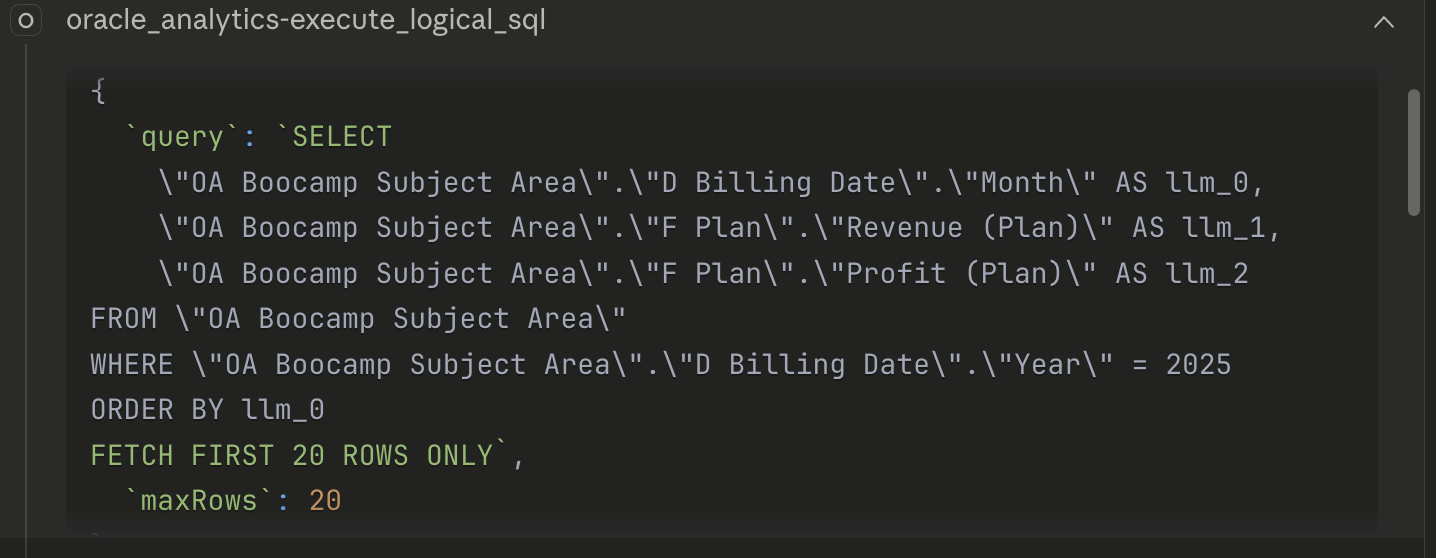

The thought process that follows shows how Claude tries to find some data. Until it ultimately runs a query without date constraints. After that the next query is focusing on 2025, where revenue and plan data actually exist.

The response for this last query is:

{

"metadata": {

"requestId": "12ea6730-07a0-4426-82e0-483a493169a8",

"batchSize": 1000,

"startTime": 1766495334726

},

"batches": [

{

"complete": true,

"cursorId": "806f23a2-1550-489f-9e30-5ab9e1bfcba0",

"batchNumber": 1,

"data": [

{

"llm_0": "2025 / 01",

"llm_1": 6229300.0,

"llm_2": 459492.5

},

{

"llm_0": "2025 / 02",

"llm_1": 6074600.0,

"llm_2": 450360.0

},

{

"llm_0": "2025 / 03",

"llm_1": 6108750.0,

"llm_2": 451884.5

},

{

"llm_0": "2025 / 04",

"llm_1": 7288200.0,

"llm_2": 538142.5

},

{

"llm_0": "2025 / 05",

"llm_1": 7591200.0,

"llm_2": 560164.0

},

{

"llm_0": "2025 / 06",

"llm_1": 8292350.0,

"llm_2": 611755.0

},

{

"llm_0": "2025 / 07",

"llm_1": 8537500.0,

"llm_2": 629779.5

},

{

"llm_0": "2025 / 08",

"llm_1": 8511000.0,

"llm_2": 627024.0

},

{

"llm_0": "2025 / 09",

"llm_1": 8430400.0,

"llm_2": 625841.0

},

{

"llm_0": "2025 / 10",

"llm_1": 8111500.0,

"llm_2": 598944.5

},

{

"llm_0": "2025 / 11",

"llm_1": 8815550.0,

"llm_2": 653583.5

},

{

"llm_0": "2025 / 12",

"llm_1": 9235200.0,

"llm_2": 689985.0

}

],

"hasMore": false,

"totalRecordsStreamed": 12,

"isComplete": true,

"recordsInBatch": 12,

"timestamp": 1766495334728

}

],

"status": {

"result": "success",

"error": false,

"message": "Streaming completed successfully"

},

"summary": {

"completed": true,

"totalBatches": 1,

"totalRecords": 12,

"endTime": 1766495334729,

"durationMs": 3

}

}

After this step, Claude retrieves also data for two more dimensions: D Products and D Geography, and also queries for revenue and plan details by products and geographies.

Once all data was retrieved, dashboard Plan vs Actual Performance was created.

Two observations:

- OAC was only used to retrieve data, all the business logic has been done outside OAC.

- I have observed an error in calculation, for which has Claude sincerely appologized (of course when I proved him wrong). In his second attempt it successfully calculated profit and profit margin totals correctly, errors which I observed by comparing data to the OAC workbook from the beginning.

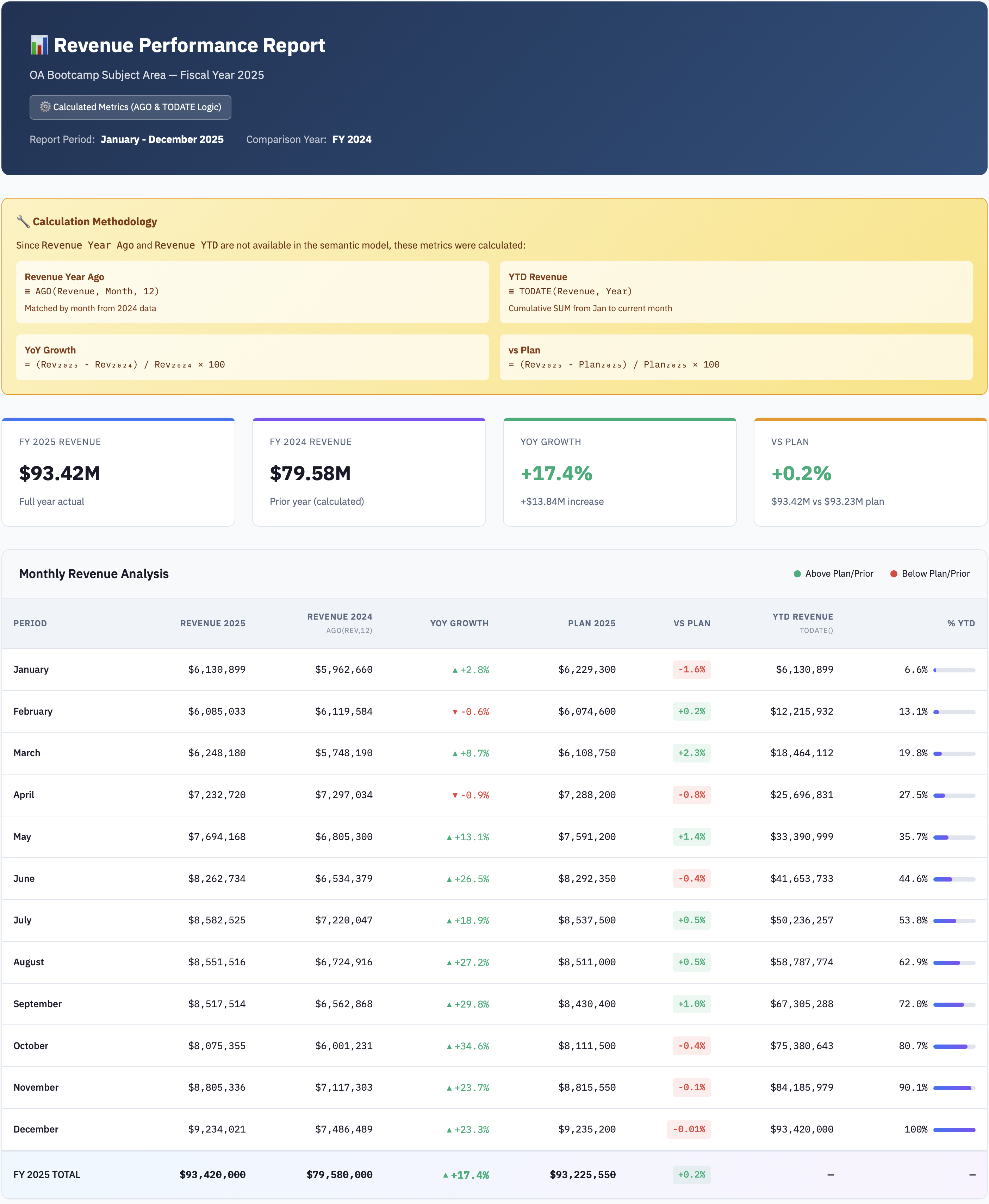

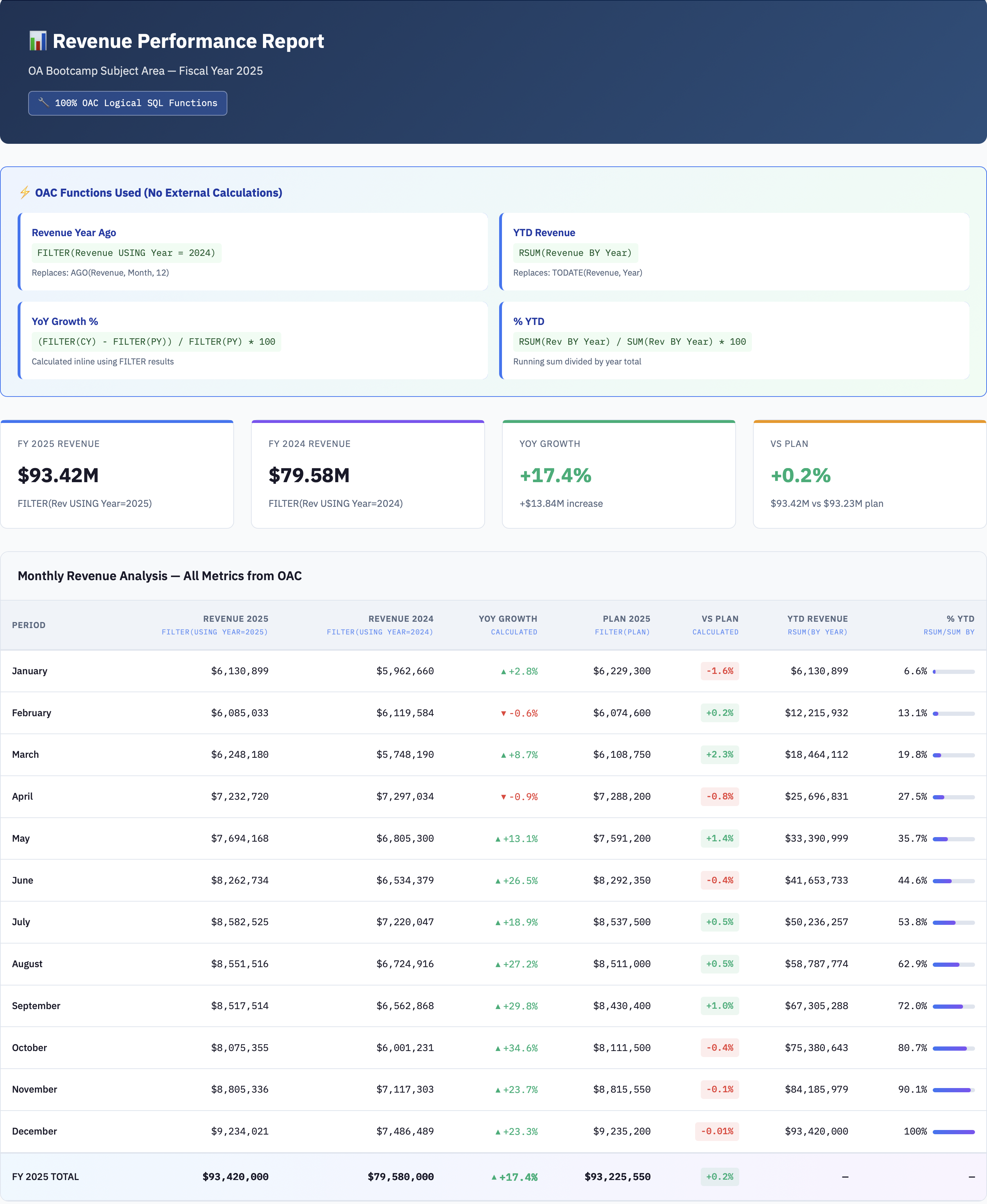

Revenue analysis

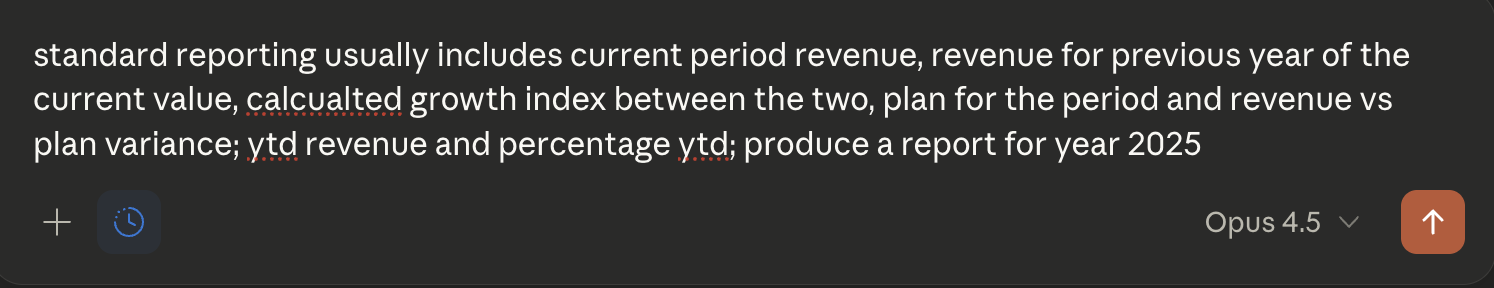

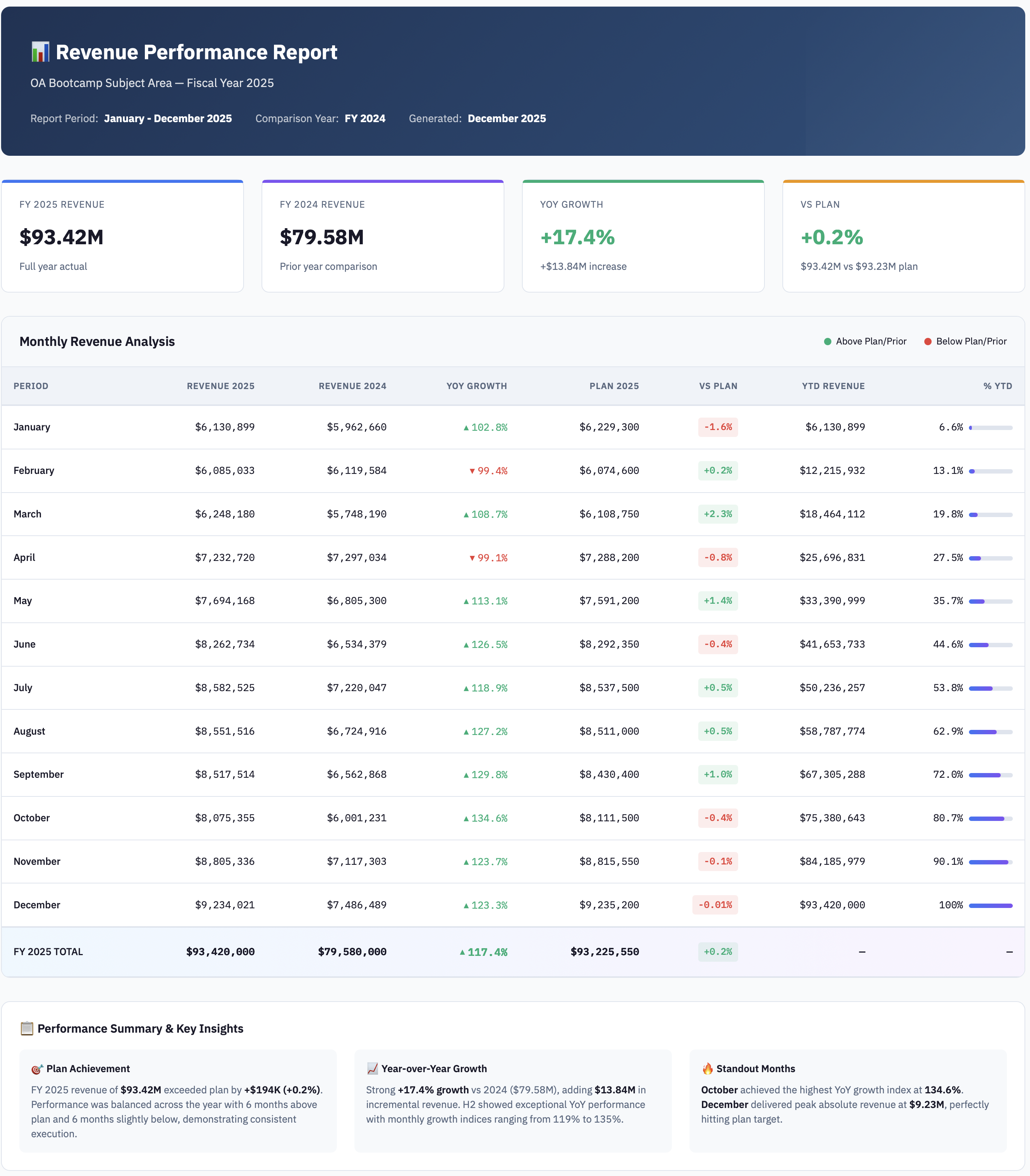

Question that bothered me was, why MCP server didn't use OAC more than just for basic data retrival. Maybe I should have asked that from it specifically? And because of that I ran another report:

Thought process produce a plan for my request:

The user wants a comprehensive standard report for 2025 that includes:

* Current period revenue (monthly breakdown for 2025)

* Revenue for previous year (same period in 2024)

* Growth index (YoY growth)

* Plan for the period

* Revenue vs Plan variance

* YTD revenue

* Percentage YTD (likely % of annual total or YTD vs prior year YTD)

Let me query the data to get all these metrics. I'll need to pull:

* 2025 monthly revenue

* 2024 monthly revenue (for YoY comparison)

* Plan data for 2025

* Calculate variances and YTD

Let me start by getting the actual and prior year data, then the plan data.

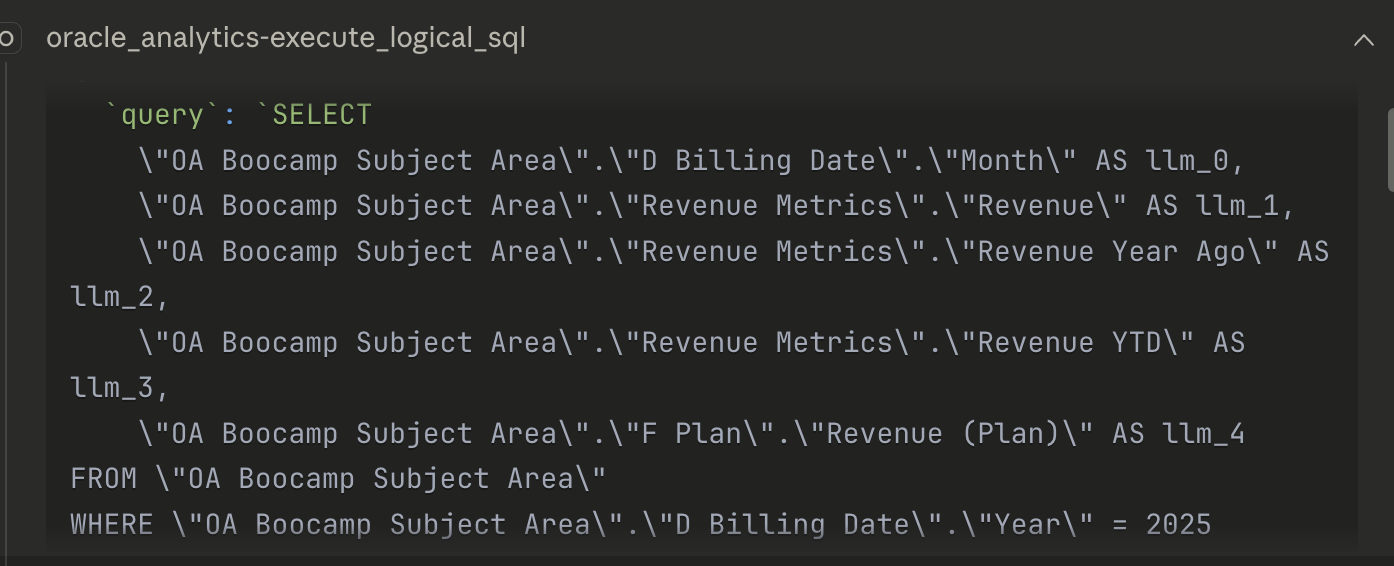

Since, it is already familiar with tables in subject area, Claude initiated a query using oracle_analytics-exdcute_logical_sql tool:

Query retreives the following data:

{

"metadata": {

"requestId": "6df595a8-c09d-43fb-a43d-6f26b1e7187c",

"batchSize": 1000,

"startTime": 1766496133247

},

"batches": [

{

"complete": true,

"cursorId": "1a05d938-88cc-4ffd-bbef-ed7e4a03b86c",

"batchNumber": 1,

"data": [

{

"llm_0": "2025 / 01",

"llm_1": 6130898.75,

"llm_2": 5962659.78,

"llm_3": 6130898.75,

"llm_4": 6229300.0

},

{

"llm_0": "2025 / 02",

"llm_1": 6085033.23,

"llm_2": 6119583.57,

"llm_3": 1.221593198E7,

"llm_4": 6074600.0

},

{

"llm_0": "2025 / 03",

"llm_1": 6248179.6,

"llm_2": 5748190.11,

"llm_3": 1.846411158E7,

"llm_4": 6108750.0

},

{

"llm_0": "2025 / 04",

"llm_1": 7232719.75,

"llm_2": 7297034.17,

"llm_3": 2.569683133E7,

"llm_4": 7288200.0

},

{

"llm_0": "2025 / 05",

"llm_1": 7694167.69,

"llm_2": 6805300.37,

"llm_3": 3.339099902E7,

"llm_4": 7591200.0

},

{

"llm_0": "2025 / 06",

"llm_1": 8262733.96,

"llm_2": 6534379.03,

"llm_3": 4.165373298E7,

"llm_4": 8292350.0

},

{

"llm_0": "2025 / 07",

"llm_1": 8582524.51,

"llm_2": 7220046.62,

"llm_3": 5.023625749E7,

"llm_4": 8537500.0

},

{

"llm_0": "2025 / 08",

"llm_1": 8551516.35,

"llm_2": 6724915.57,

"llm_3": 5.878777384E7,

"llm_4": 8511000.0

},

{

"llm_0": "2025 / 09",

"llm_1": 8517513.79,

"llm_2": 6562867.87,

"llm_3": 6.730528763E7,

"llm_4": 8430400.0

},

{

"llm_0": "2025 / 10",

"llm_1": 8075354.96,

"llm_2": 6001230.73,

"llm_3": 7.538064259E7,

"llm_4": 8111500.0

},

{

"llm_0": "2025 / 11",

"llm_1": 8805336.27,

"llm_2": 7117302.88,

"llm_3": 8.418597886E7,

"llm_4": 8815550.0

},

{

"llm_0": "2025 / 12",

"llm_1": 9234021.14,

"llm_2": 7486489.3,

"llm_3": 9.342E7,

"llm_4": 9235200.0

}

],

"hasMore": false,

"totalRecordsStreamed": 12,

"isComplete": true,

"recordsInBatch": 12,

"timestamp": 1766496133251

}

],

"status": {

"result": "success",

"error": false,

"message": "Streaming completed successfully"

},

"summary": {

"completed": true,

"totalBatches": 1,

"totalRecords": 12,

"endTime": 1766496133252,

"durationMs": 5

}

}

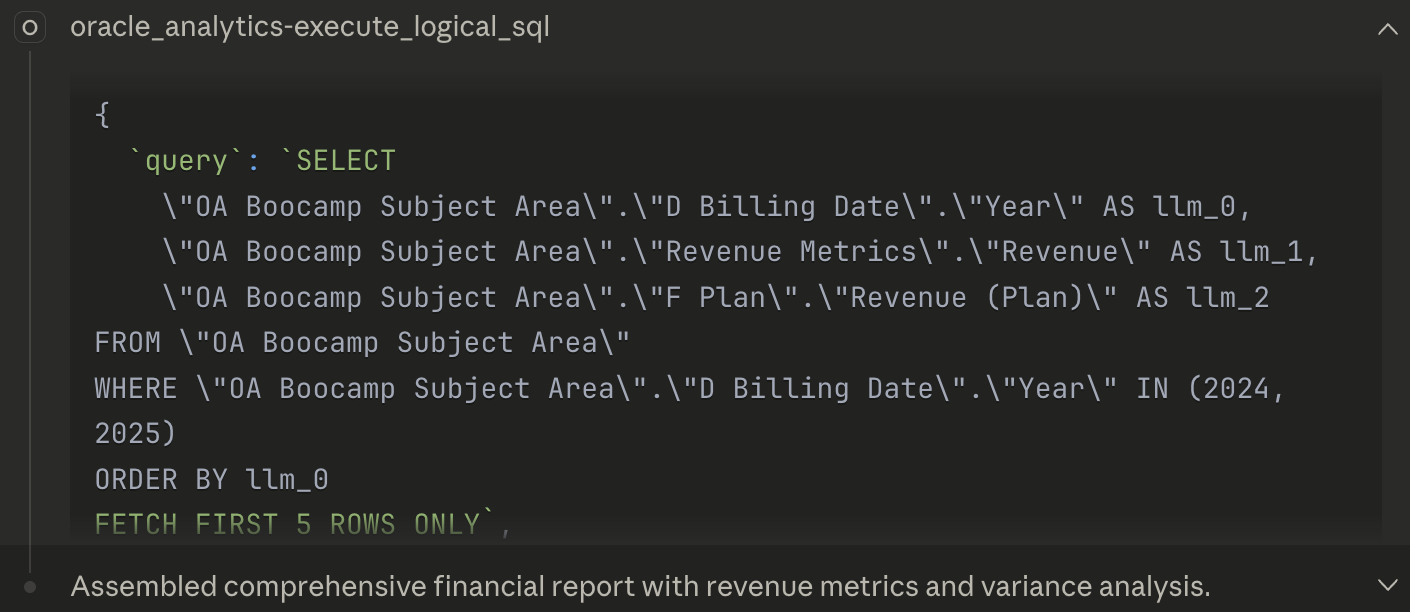

Then it triggers another query to get the annual totals for context:

{

"metadata": {

"requestId": "631b445c-1503-4339-b68c-ae9c466dead3",

"batchSize": 1000,

"startTime": 1766496141065

},

"batches": [

{

"complete": true,

"cursorId": "ec667120-0582-455a-a49b-0f5e386fdb2e",

"batchNumber": 1,

"data": [

{

"llm_0": 2024.0,

"llm_1": 7.958E7,

"llm_2": 7.927685E7

},

{

"llm_0": 2025.0,

"llm_1": 9.342E7,

"llm_2": 9.322555E7

}

],

"hasMore": false,

"totalRecordsStreamed": 2,

"isComplete": true,

"recordsInBatch": 2,

"timestamp": 1766496141068

}

],

"status": {

"result": "success",

"error": false,

"message": "Streaming completed successfully"

},

"summary": {

"completed": true,

"totalBatches": 1,

"totalRecords": 2,

"endTime": 1766496141069,

"durationMs": 4

}

}

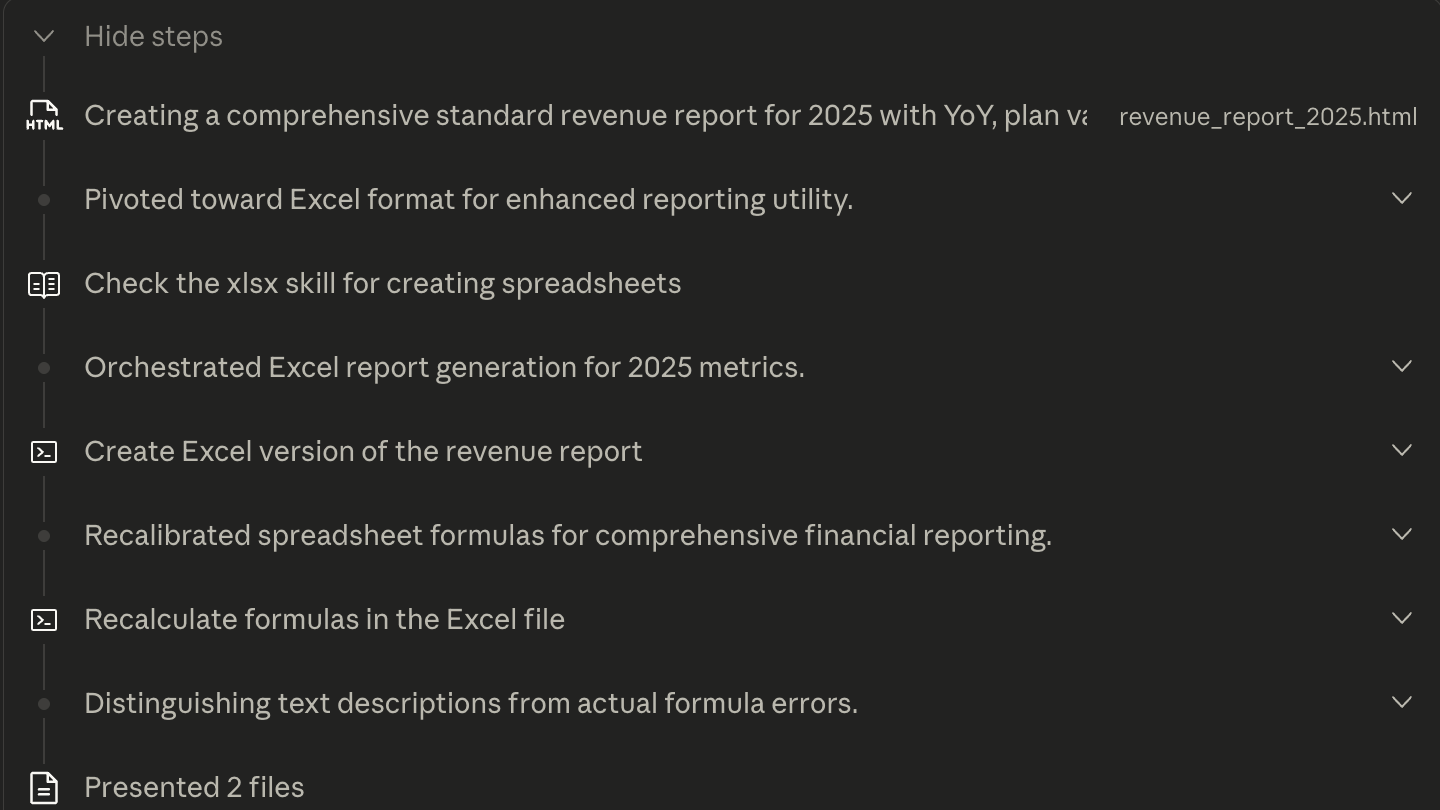

With this, it retrieved all required data and continued to produce a report:

From the next steps, we can clearly see, that again, Claude retrieved all neccesary data and after that it used other (python) tools to generate HTML report. It actually create an excel file with same data as well.

Check them here:

Even though report looks correct and very user friendly, it was calculated outside of OAC. Which could have led to potentially wrong calculations, or at least, there is no control over these calculations by the OAC.

Therefore, let's try if we could change this behaviour.

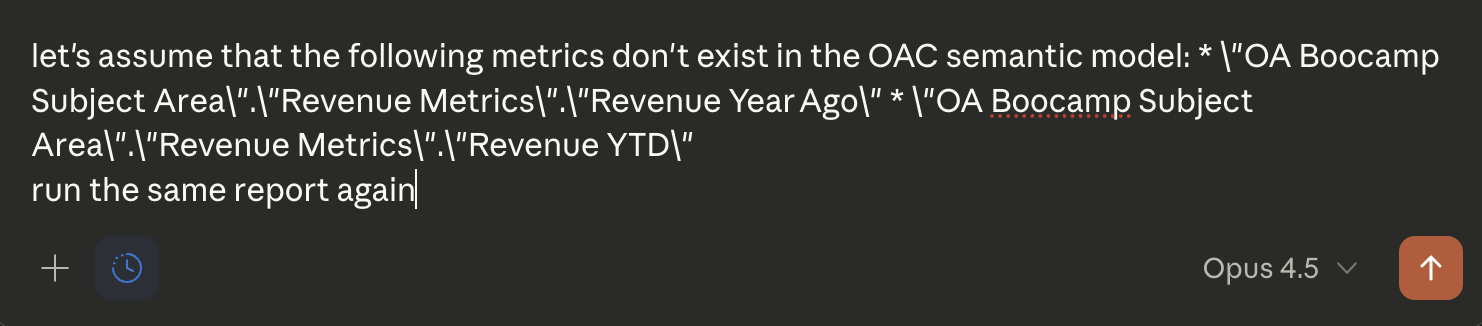

But, before that let me check something else. In our subject area we have two pre-calculated columns: Revenue Year Ago and Revenue YTD. In the query above, both have been retrieved by simply reading them by referencing them "OA Boocamp Subject Area"."Revenue Metrics"."Revenue Year Ago" and "OA Boocamp Subject Area"."Revenue Metrics"."Revenue YTD". But what if these two columns weren't pre-calcualted? Would OAC MCP Server be able to find a solution? Let's examine it:

And this was its first thought:

The user wants me to recreate the same report but without relying on the pre-calculated "Revenue Year Ago" and "Revenue YTD" metrics from the semantic model. I'll need to calculate these myself using:

Revenue Year Ago: Use the AGO() function to get prior year revenue

Revenue YTD: Use the TODATE() function to calculate year-to-date revenue

Let me query the data using these Logical SQL functions instead.

Great, it found way around to use OAC Time Series functions. Here is the query:

But, it seems, level has not been specified correctly! To be honest, this is often an issue also when creating time series calculations OAC, so I can't blame it not finding it correctly.

Anyway, based on error receieved related to using correct time levels with AGO() and TODATE() functions, alternative path was found. But using an outside python-code based calculation for YoY and YTD metrics. And HTML and Excel reports were generated successully.

So, after a very promissing start, we ended up with key calculations being run in a python program, Cluade has generated.

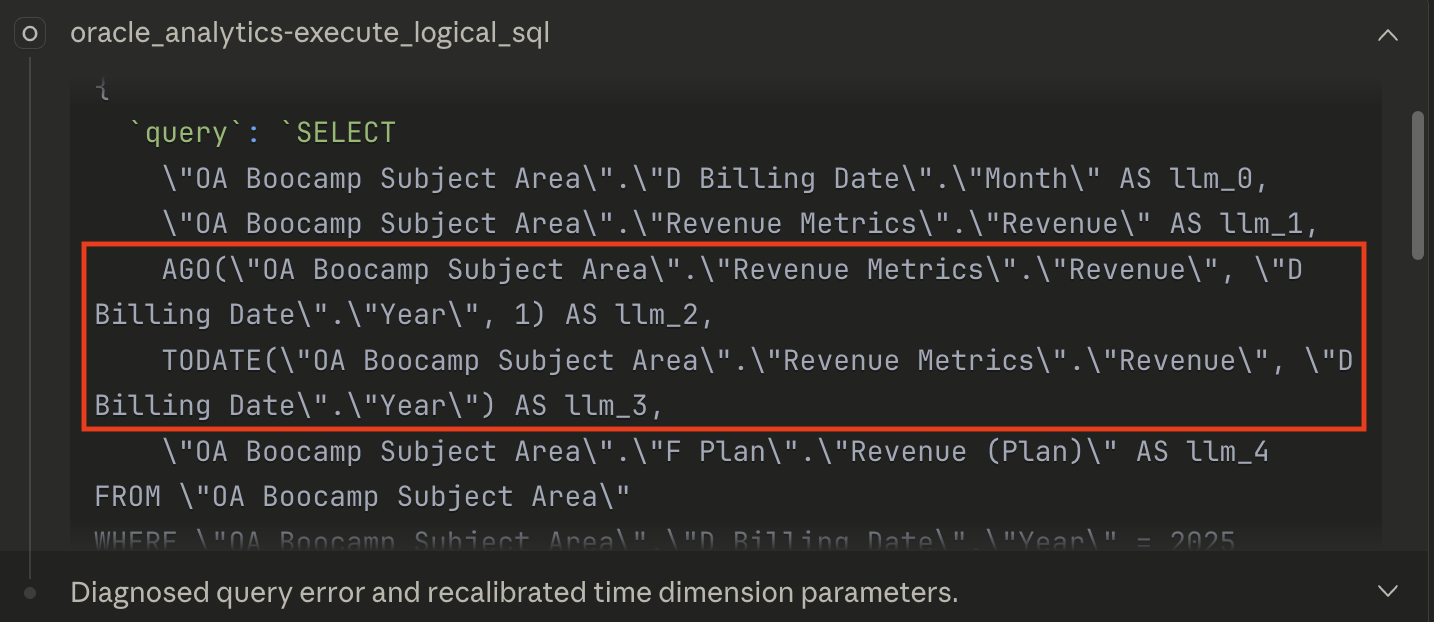

So, I decided for very direct and clear instruction:

Now, Claude decided to retry to run query with AGO() and TODATE() functions. Still no breakthrough. However, now it had to look for alternative. So it figured out that RSUM() would work for year-to-date and successfully verified FILTER() function to calculate year-on-year.

Further, it ran some other tests to verify if FILTER runs at aggregated level (i.e. Month) and it did. It also checked out if RIGHT() function works (to extract month number from the month (it is formatted as YYYY/MM*).

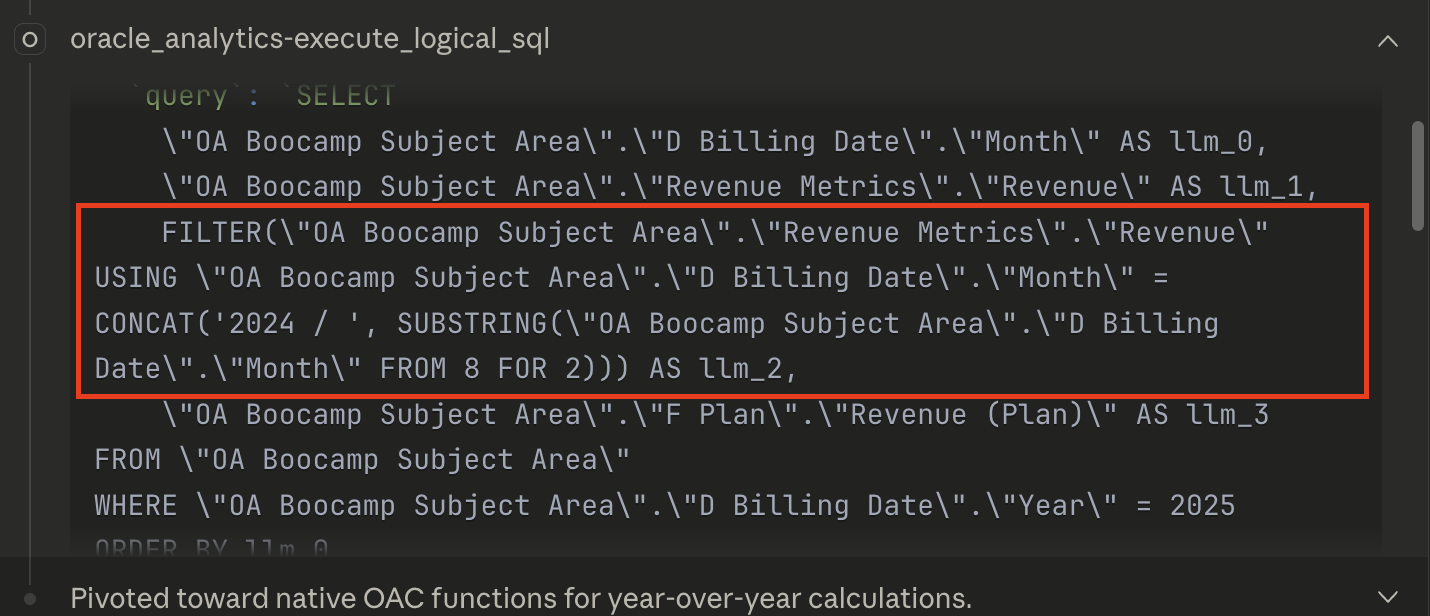

And finally, the query was produced and executed using oracle_analytics-execute_logical tool:

{

`query`: `SELECT

RIGHT(\"OA Boocamp Subject Area\".\"D Billing Date\".\"Month\", 2) AS llm_0,

FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025) AS llm_1,

FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2024) AS llm_2,

((FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025) -

FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2024)) /

FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2024)) * 100 AS llm_3,

FILTER(\"OA Boocamp Subject Area\".\"F Plan\".\"Revenue (Plan)\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025) AS llm_4,

((FILTER(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025) -

FILTER(\"OA Boocamp Subject Area\".\"F Plan\".\"Revenue (Plan)\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025)) /

FILTER(\"OA Boocamp Subject Area\".\"F Plan\".\"Revenue (Plan)\" USING \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025)) * 100 AS llm_5

FROM \"OA Boocamp Subject Area\"

WHERE \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" IN (2024, 2025)

ORDER BY llm_0

FETCH FIRST 15 ROWS ONLY`,

`maxRows`: 15

}

The query above produced most of required data, except YTD metrics, for which a separate query was produced:

{

`query`: `SELECT

\"OA Boocamp Subject Area\".\"D Billing Date\".\"Month\" AS llm_0,

RSUM(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" BY \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\") AS llm_1,

(RSUM(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" BY \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\") /

SUM(\"OA Boocamp Subject Area\".\"Revenue Metrics\".\"Revenue\" BY \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\")) * 100 AS llm_2

FROM \"OA Boocamp Subject Area\"

WHERE \"OA Boocamp Subject Area\".\"D Billing Date\".\"Year\" = 2025

ORDER BY llm_0

FETCH FIRST 15 ROWS ONLY`,

`maxRows`: 15

}

With all of these data, an excel file and a HTML files were generated: